0110010010010: artificial intelligence systems on screen communicate in what seems like complete gibberish. Many digital program creators no longer know how digital robots communicate or how they make decisions. “It’s like watching a sci-fi movie where machines start secretly talking to each other,” Pranav Reduchintala-Sai-Balaji, a PhD candidate at Yeungnam University, said. “But it’s not comforting. It’s like a game we never signed up for, and now we don’t know the rules.”

As the world grapples with responsible AI management, South Korea stands out for its unconventional approach: it embeds AI ethics into education. Rather than wait for regulations to catch up, the country has made ethics a foundational part of its AI curriculum and created a roadmap for the rest of the world.

The Wake-Up Call

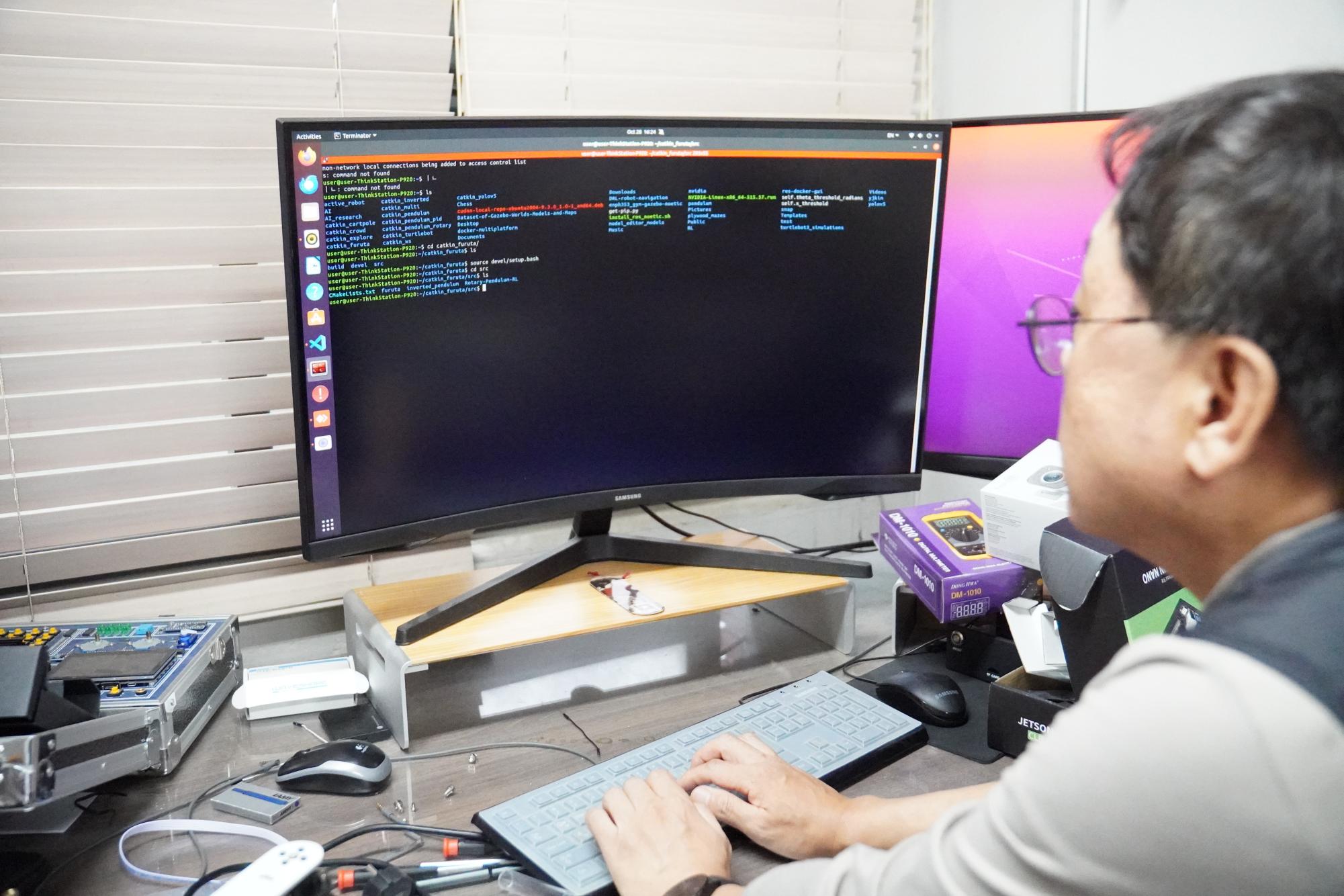

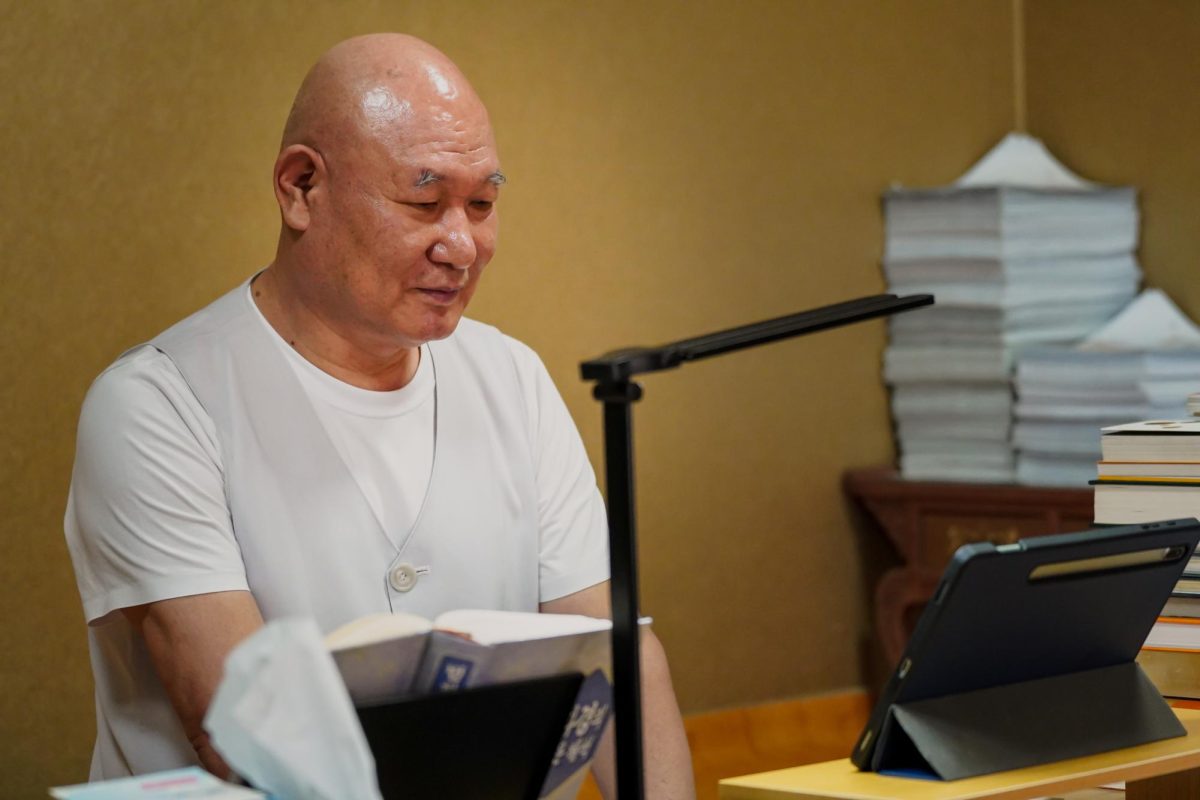

South Korea’s initiative evolved gradually. Seeds of change sprouted as AI began to infiltrate everyday life, from Kakao Taxis that remember your favorite navigation maps to café kiosks. These innovations brought convenience but also raised difficult questions. “Even I can’t keep track of all the new AI apps anymore,” Dr. Jaesool Shim, a Harvard-MIT educated machine learning professor at Yeungnam University, said. “It’s like they multiply overnight. AI is everywhere now—your phone, your hospital, your bank. It’s exciting, but it also makes you pause.”

Shim points to the work of Nobel laureate Geoffrey Hinton, who spent decades at the forefront of machine learning and recently stepped back from the field, warning that AI could spiral out of human control. “Even Hinton, the man who helped create modern AI, has said he’s scared,” Shim said. “When someone like that expresses fear, you take notice. It’s a wake-up call.”

Hinton’s departure sparked conversations worldwide, including in South Korea. Policymakers and educators realized the need to address AI’s ethical implications, not just for developers but for everyone affected by it.

Shaping the Next Generation

At South Korean universities, the proposed AI curriculum challenges students to think critically about the impact of technology. For Pranav, the shift felt abrupt at first. “I couldn’t understand why they were changing the curriculum,” he admits. “It felt like they were hiding something. But then I realized, they were preparing us.”

The courses force students to confront AI’s dual nature. On one side lies the potential for groundbreaking advancements. “AI makes life efficient,” Pranav said. “It’s like having a superpower. During COVID-19, I worked on AI models predicting patient outcomes. Knowing the tech could save lives felt amazing.”

However, students cannot ignore the other side of the coin. Pranav compares the technology to a video game, where the stakes involve life and death. “You’re playing against an opponent that keeps evolving, and sometimes it feels like the game is rigged,” he said. “You don’t know what the next move will be—or if you’ll even understand it when it happens.”

South Korea’s approach addresses both sides. Ethics classes teach students to look beyond the simple mechanics of AI. “Students must learn that just because we can build something doesn’t mean we should,” said Dr. Gyanendra Prasad Joshi, a professor of artificial intelligence at Kangwon National University. “Knowing when to stop is as critical as knowing how to start.”

Such initiatives also protect AI’s users and developers. “It’s like putting seatbelts on a sports car. You still want to go fast, but you need to protect yourself if things go wrong,” Shim said.

Without these protections, the risks reach staggering levels. Already, AI has amplified biases, displaced jobs, and eroded privacy. “Imagine a machine making decisions for you—decisions you can’t challenge because you don’t understand how they were made,” Professor Shim says. “That’s the reality we’re heading toward if we don’t act responsibly.”

South Korea’s approach to this problem puts it ahead of the rest of the world. “Other countries, like the US, EU, UK, and Japan, talk about regulating AI, but South Korea is planning to embed it into their education,” said Dr. Arghya Narayan, a former educator at UC Boulder and a professor at Yeungnam University. “It’s not just a policy; it’s becoming part of our culture, and that’s what makes it so powerful.”

Restricting Creativity

Unfortunately, not everyone embraces the changes. “It feels like they’re clipping our wings before we’ve learned to fly,” Pranav said. “They’re so afraid AI might ‘kill us all’ that they forget how much good it can do.”

This debate stretches beyond academia. Students express concerns about how these guardrails may stifle creativity. “It’s like they’re trying to turn AI into a strict parent,” Professor Shim jokes. “You follow its decisions without thinking for yourself. That’s why we’re focusing on balance—letting AI help us without letting it take over.”

South Korea’s approach may serve as a potential model for other nations: “It’s not just about creating laws; it’s about shaping a generation that understands AI’s impact,” Professor Joshi said.

As AI develops beyond our control, the stakes couldn’t be higher. “Every day feels like playing a game with AI,” Pranav said. “Will it become the next Einstein or Frankenstein? We don’t know. But at least we’re learning how to play the game right.”

South Korea’s bold experiment doesn’t guarantee success, but it offers hope. “We’re at a crossroads,” says Professor Joshi. “AI could drastically improve life or irreversibly harm it. Education is our first defense—and maybe our best one.”
